A quarter century ago, the qubit was born

John Archibald Wheeler was fond of clever phrases.

He made the term “black hole” famous in the 1960s. He also coined the now-familiar “wormhole” and “quantum foam.” While further pondering the mystery of quantum physics at the University of Texas at Austin during the 1980s, Wheeler repeatedly uttered his favorite interrogative slogan: “How come the quantum?” And from those ponderings emerged yet another memorable phrase: “It from Bit.” That became a meme that inspired a surprising new field of physics — quantum information theory.

That theory’s basic unit, the qubit, made its scientific debut at a conference in Dallas 25 years ago. Wheeler didn’t invent the term qubit. One of his former students, Benjamin Schumacher, coined it to refer to a bit of quantum information. At the time, few people appreciated the importance of information in quantum physics. And hardly anyone realized how dramatically the qubit would drive the development of a whole new field of science and associated technology — just now on the verge of producing powerful quantum computers.

Some physicists had discussed the idea of exploiting quantum weirdness for computing much earlier. Paul Benioff of Argonne National Laboratory in Illinois had shown by 1980 that quantum processes could be used to compute. Shortly thereafter Richard Feynman suggested that quantum computers, in principle, could do things that traditional computers couldn’t. But while physicists dreamed of building a quantum computer, the quest lacked a compelling purpose until 1994, when mathematician Peter Shor of Bell Labs showed that a quantum computer could be useful for something important: cracking secret codes. Shor’s algorithm provided the motivation for the research frenzy now paying off in real quantum computing devices. But it was the qubit, invented two years before Shor’s work, that provided the conceptual tool that made progress in such research possible.

A search for quantum meaning

When he coined the phrase It from Bit, Wheeler was concerned more with fundamental questions about physics and existence than with quantum computing. He wanted to know why quantum physics ruled the universe, why the mysterious fuzziness of nature at its most basic gave rise to the rock-solid reality presented to human senses. He couldn’t accept that the quantum math was all there was to it. He did not subscribe to the standard physicists’ dogma — shut up and calculate — uttered in response to people who raised philosophical quantum questions.

Wheeler sought the secret to quantum theory’s mysterious power by investigating the role of the observer. Quantum math offers multiple outcomes of a measurement; one or another specific result materializes only upon an act of observation. Wheeler wanted to know how what he called “meaning” arose from the elementary observations that turned quantum possibilities into realities. And so for a long time he sought a way to quantify “meaning.” During an interview in 1987, I asked him what he meant by that. In particular, I wondered whether he had in mind some sort of numerical measure of meaning, similar to the math of information theory (which quantifies information in bits, typically represented by 0 and 1). He said yes, but at the time he was uneasy about treating information processing as a fundamental feature of nature. That notion had been discussed in a popular book of the time: The Anthropic Cosmological Principle by John Barrow and Frank Tipler. For Wheeler, their view of information processing in the cosmos evoked an image of a bunch of people punching numbers into adding machines. “I’d be much happier if they talked about making observations,” Wheeler told me.

But not long afterward, work by one of his students, Wojciech Zurek, inspired Wheeler to begin talking about observations in terms of information. An observation provided a yes-or-no answer to a question, Wheeler mused. Is the particle here (yes) or not (no)? Is a particle spinning clockwise? Or counterclockwise? Is the particle even a particle? Or is it actually a wave? These yes-or-no answers, Wheeler saw, corresponded to the 1s and 0s, the bits, of information theory. And so by 1989, he had begun to recast his favorite question (How come the quantum?) as a matter of explaining how existence emerges from such yes-or-no observations: It from Bit.

Wheeler wasn’t the first to mix information with quantum physics. Others had dabbled with quantum information processes earlier, most notably by using quantum information to send uncrackable secret codes. “Quantum cryptography” had been proposed by Charles Bennett of IBM and Gilles Brassard of the University of Montreal in the early 1980s. By 1990, when I visited Bennett at IBM, he had built a working model of a quantum cryptographic signaling system, capable of sending photons about a meter from one side of a table in his office to the other. Nowadays, systems built on similar principles send signals hundreds of kilometers through optical fiber; some systems can send quantum signals through the air and even through space. Back then, though, Bennett’s device was merely a demonstration of how the weirdness of quantum physics might have a practical application. Bennett even thought it might be the only thing quantum weirdness would ever be good for.

But a few physicists suspected otherwise. They thought quantum physics might someday actually enable a new, more powerful kind of computing. Quantum math’s multiple possible outcomes of measurements offered a computing opportunity: Instead of computing with one number at a time, quantum physics seemed to allow many parallel computations at once.

Feynman explored the idea of quantum computing to simulate natural physical processes. He pointed out that nature, being quantum mechanical, must perform feats of physical legerdemain that could not be described by an ordinary computer, limited to 1s and 0s manipulated in a deterministic, cause-and-effect way. Nature incorporated quantum probabilities into things like chemical reactions. Simulating them in detail with deterministic digital machinery didn’t seem possible. But if a computer could be designed to take advantage of quantum weirdness, Feynman supposed, emulating nature in all its quantum wonder might be feasible. “If you want to make a simulation of nature, you’d better make it quantum mechanical,” Feynman said in 1981 at a conference on the physics of computing. He mentioned that doing that didn’t “look so easy.” But he could see no reason why it wouldn’t be possible.
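
Feynman’s point can be made concrete with a rough count. A register of n qubits is described by one complex amplitude for each of the 2^n possible n-bit strings, which is also the sense in which a quantum computer seems to work on many numbers at once. The Python sketch below is illustrative only: the helper names are hypothetical, and it assumes 16 bytes per amplitude (one double-precision complex number). It builds the equal superposition produced by applying a Hadamard gate to every qubit and estimates the memory a brute-force classical simulation would need just to store the state.

```python
import math

# Assumption: each amplitude is stored as a double-precision complex number (2 x 8 bytes).
BYTES_PER_AMPLITUDE = 16

def num_amplitudes(n_qubits: int) -> int:
    """An n-qubit state has one complex amplitude for each of the 2**n bit strings."""
    return 2 ** n_qubits

def uniform_superposition(n_qubits: int) -> list[complex]:
    """State after a Hadamard gate on every qubit of |00...0>: all 2**n bit strings
    carry the same amplitude 1/sqrt(2**n)."""
    dim = num_amplitudes(n_qubits)
    amplitude = 1 / math.sqrt(dim)
    return [complex(amplitude) for _ in range(dim)]

def state_vector_bytes(n_qubits: int) -> int:
    """Memory a brute-force classical simulation needs just to hold the state."""
    return num_amplitudes(n_qubits) * BYTES_PER_AMPLITUDE

if __name__ == "__main__":
    psi = uniform_superposition(3)
    print(len(psi))                        # 8 amplitudes for 3 qubits
    print(sum(abs(a) ** 2 for a in psi))   # total probability, 1 up to rounding
    for n in (10, 30, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

Under these assumptions each added qubit doubles the storage: 30 qubits already need 16 GiB and 50 qubits need 16 PiB, which illustrates why detailed simulation of quantum systems on ordinary hardware scales so badly.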

A few years later, quantum computers attracted further attention through the work of the imaginative British physicist David Deutsch. He used the prospect of such machines to illustrate a view of quantum physics devised in the 1950s by Hugh Everett III (another student of Wheeler’s), known as the Many Worlds Interpretation. In Everett’s view, each possibility in the quantum math described a physically real result of a measurement. In the universe you occupy, you see only one of the possible results; the others occur in parallel universes. (Everett’s account suggested that observers split into copies of themselves, each continuing to exist in its own newly created universe.)

Deutsch realized that the Everett view, if correct, opened up a new computing frontier. Just build a computer that could keep track of all the parallel universes created when bits were measured. You could then compute in countless universes all at the same time, dramatically shortening the time it would take to solve a complex problem.

But there weren’t any meaningful problems that could be solved in that way. That was the situation in 1992, when a conference on the physics of computation was convened in Dallas. Participants there discussed the central problem of quantum computers: they might compute faster than an ordinary computer, but they wouldn’t always give you the answer you wanted. If a quantum computer could compute 100 times faster than an ordinary computer, it would provide the correct answer only once in every 100 tries, wiping out its advantage.
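
The tradeoff at the end of that paragraph is simple arithmetic. The sketch below models a hypothetical machine that runs each attempt `speedup` times faster than a classical computer but succeeds only with probability `success_probability`, and it assumes a correct answer can be recognized when it appears (true for factoring, where a proposed factor can be checked by division). The function names are illustrative, not from any library. On average such a machine needs 1/p attempts, so the per-attempt speedup is divided by that number.

```python
from fractions import Fraction

def expected_tries(success_probability: Fraction) -> Fraction:
    """Mean number of independent attempts until the first success (geometric distribution): 1/p."""
    return 1 / success_probability

def expected_total_time(classical_time: Fraction, speedup: int, success_probability: Fraction) -> Fraction:
    """Expected time for a hypothetical machine that is `speedup` times faster per attempt
    but returns the right answer only with probability `success_probability`.
    Assumes a wrong answer is recognized immediately and the machine is simply rerun."""
    time_per_try = classical_time / speedup
    return time_per_try * expected_tries(success_probability)

if __name__ == "__main__":
    classical = Fraction(1)  # one unit of classical computing time
    print(expected_total_time(classical, 100, Fraction(1, 100)))  # 1: no net gain
    print(expected_total_time(classical, 100, Fraction(1, 2)))    # 1/50: a real speedup
```

Exact fractions are used so the break-even case comes out as exactly 1 rather than a floating-point approximation.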

A very good idea

While much of the attention at the Dallas meeting focused on finding ways to use quantum computers, Schumacher’s presentation looked at a more fundamental issue: how computers coded information. As was well known, ordinary computers stored and processed information in the form of bits, short for binary digits, the 1s and 0s of binary arithmetic. But for quantum computing, Schumacher contended, bits were not the right way to go about it. You needed quantum bits of information: qubits. His talk at the Dallas conference was the first scientific presentation to use and define the term.

Schumacher, then and now at Kenyon College in Gambier, Ohio, had discussed such issues a few months earlier with William Wootters, another Wheeler protégé. “We joked that maybe what was needed was a quantum measure of information, and we would measure things in qubits,” Schumacher told me. They thought it was a good joke and laughed about it. “But the more I thought about it, the more I thought it was a good idea,” he said. “It turned out to be a very good idea.”

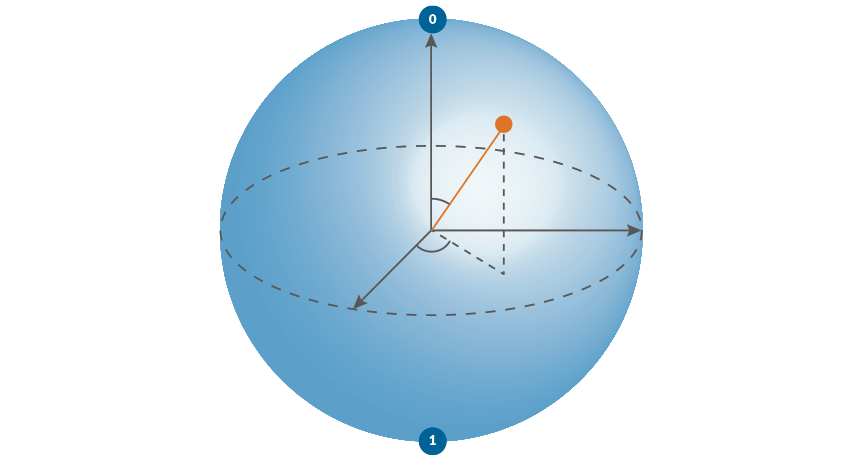

Over the summer of 1992, Schumacher worked out more than just a clever name; he proved a theorem about how qubits could be used to quantify the quantum information sent through a communication channel. In essence, a quantum bit represents the information contained in the spin of a quantum particle; it could be 0 or 1 (if the state of the particle’s spin is already known) or a mix of 0 and 1 (if the particle’s spin has not yet been measured). If traditional bits of 0 or 1 can be represented by a coin that lands either heads or tails, a qubit would be a spinning coin with definite probabilities for landing either heads or tails.
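
The spinning-coin picture corresponds to the standard textbook notation for a qubit state, α|0⟩ + β|1⟩, where the probabilities of reading 0 or 1 are |α|² and |β|², which must sum to 1. The short Python simulation below is only a sketch: the helper names are hypothetical, it samples outcomes with a seeded pseudorandom generator, and it does not model what happens to the state after a measurement. Measurements in this single 0/1 basis also cannot reveal the relative phase between α and β, a feature of real qubits that the coin analogy misses as well.

```python
import math
import random

def make_qubit(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Return the normalized qubit state alpha|0> + beta|1>."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("amplitudes cannot both be zero")
    return alpha / norm, beta / norm

def probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """Born rule: P(0) = |alpha|^2 and P(1) = |beta|^2."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state: tuple[complex, complex], rng: random.Random) -> int:
    """Simulate one 0/1 measurement; the outcome is random with Born-rule odds."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

if __name__ == "__main__":
    rng = random.Random(42)
    coin = make_qubit(1, 1)          # equal superposition: a fair "spinning coin"
    print(probabilities(coin))       # approximately (0.5, 0.5)
    counts = [0, 0]
    for _ in range(10_000):
        counts[measure(coin, rng)] += 1
    print(counts)                    # roughly 5,000 of each outcome
```

A qubit made with `make_qubit(1, 0)` always yields 0, the analog of a coin already lying heads up.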

Traditional “classical” bits measure the amount of physical resources you need to encode a message. In an analogous way, Schumacher showed how qubits did the same thing for quantum information. “In quantum mechanics, the essential unit of information is the qubit, the amount of information you can store in a spin,” he said at the Dallas meeting. “This is sort of a different way of thinking about the relation of information and quantum mechanics.”
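
Schumacher’s result is usually stated as the quantum noiseless coding theorem: a source whose signals are described on average by a density matrix ρ can be compressed, over many signals, to about S(ρ) = -Tr(ρ log₂ ρ) qubits per signal (the von Neumann entropy), much as Shannon entropy sets the number of bits per symbol for a classical source. The pure-Python sketch below uses illustrative helper names and handles only single-qubit (2×2) density matrices. It computes S(ρ) for a source that sends |0⟩ or the equal superposition (|0⟩ + |1⟩)/√2 with probability 1/2 each. Naming which state was sent takes 1 bit, yet because the two states overlap, the quantum signal needs only about 0.60 qubits.

```python
import math

def density_matrix(ensemble):
    """rho = sum_i p_i |psi_i><psi_i| for (probability, (alpha, beta)) pairs of normalized states."""
    rho = [[0j, 0j], [0j, 0j]]
    for p, (alpha, beta) in ensemble:
        vec = (complex(alpha), complex(beta))
        for r in range(2):
            for c in range(2):
                rho[r][c] += p * vec[r] * vec[c].conjugate()
    return rho

def eigenvalues_2x2_hermitian(rho):
    """Closed-form eigenvalues of a 2x2 Hermitian matrix [[a, b], [conj(b), d]]."""
    a, b, d = rho[0][0].real, rho[0][1], rho[1][1].real
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return mean + radius, mean - radius

def shannon_entropy_bits(probs):
    """H = -sum p log2 p in bits; (near-)zero probabilities contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 1e-15)

def von_neumann_entropy(rho):
    """S(rho) = -Tr(rho log2 rho), i.e. the Shannon entropy of rho's eigenvalues."""
    return shannon_entropy_bits(eigenvalues_2x2_hermitian(rho))

if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    source = [(0.5, (1, 0)), (0.5, (s, s))]  # |0> or |+>, each with probability 1/2
    print(shannon_entropy_bits([0.5, 0.5]))                        # 1.0 bit to name the state
    print(round(von_neumann_entropy(density_matrix(source)), 3))   # 0.601 qubits per signal
```

Two orthogonal states sent with equal probability give S(ρ) = 1, matching one classical bit, while a source that always sends the same pure state gives 0.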

Other physicists did not immediately jump on the qubit bandwagon. It was three years before Schumacher’s paper was even published. By then, Shor had shown how a quantum algorithm could factor large numbers quickly, putting a widely used encryption system for all sorts of secret information at risk — if anyone knew how to build a quantum computer. But that was just the problem. At the Dallas meeting, IBM physicist Rolf Landauer argued strongly that quantum computing would not be technologically feasible. All the discussion of quantum computing occurred in the realm of equations, he pointed out. Nobody had the slightest idea of how to prepare a patent proposal. In real physical systems, the fragility of quantum information — the slightest disturbance would destroy it — made the accumulation of errors in the computations inevitable.
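
The widely used system in question is presumably RSA, whose security rests on the difficulty of factoring large numbers. Shor’s algorithm reduces factoring N to finding the period r of a^x mod N for a randomly chosen a: if r is even and a^(r/2) is not congruent to -1 mod N, then gcd(a^(r/2) - 1, N) is a nontrivial factor. The sketch below shows only that classical reduction. The period is found by brute force, which takes exponential time and stands in for the quantum step that Shor’s algorithm performs efficiently. Function names are hypothetical, and the input is assumed to be an odd composite that is not a prime power.

```python
import math
import random

def find_period_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force stands in for the quantum period-finding step."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_style_factor(n: int, rng: random.Random, max_attempts: int = 20):
    """Try to split an odd composite n (not a prime power) via the order-finding reduction."""
    for _ in range(max_attempts):
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g  # the random guess already shares a factor with n
        r = find_period_classically(a, n)
        if r % 2 == 1:
            continue  # odd period: try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a**(r/2) == -1 (mod n): this a yields only trivial factors
        p = math.gcd(y - 1, n)  # y*y == 1 but y != +-1 (mod n), so p is a proper factor
        return p, n // p
    return None

if __name__ == "__main__":
    rng = random.Random(1)
    print(find_period_classically(7, 15))  # 4
    print(shor_style_factor(15, rng))      # a factor pair of 15: (3, 5) or (5, 3)
```

Everything here except `find_period_classically` runs quickly even for very large numbers; the period search is the only part that makes factoring hard for ordinary computers.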

Landauer repeated his objections in 1994 at a workshop in Santa Fe, N.M., where quantum computing, qubits and Shor’s algorithm dominated the discussions. But a year or so later, Shor himself showed how adding extra qubits to a system would offer a way to catch and correct errors in a quantum computing process. Those extra qubits would be linked to, or entangled with, the qubits performing a computation without actually being involved in the computation itself. If an error occurred, the redundant qubits could be called on to restore the original calculation.
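
Shor’s 1995 code used nine physical qubits to protect one logical qubit against an arbitrary error on any one of them. Its simplest ingredient, the three-qubit bit-flip code, already shows the key trick: the state α|0⟩ + β|1⟩ is spread over three entangled qubits as α|000⟩ + β|111⟩, and errors are diagnosed by checking parities between qubits rather than by reading the encoded amplitudes. The Python state-vector sketch below is illustrative only. It handles just single bit flips, the names are hypothetical, and it reads the parities directly from the simulated amplitudes, a shortcut standing in for the extra ancilla qubits that real hardware would entangle with the data and then measure.

```python
def bit(index: int, qubit: int) -> int:
    """Value of `qubit` (0, 1 or 2) in a 3-bit basis-state index; qubit 0 is the leftmost bit."""
    return (index >> (2 - qubit)) & 1

def encode(alpha: complex, beta: complex) -> list[complex]:
    """alpha|0> + beta|1>  ->  alpha|000> + beta|111> (three-qubit repetition code)."""
    state = [0j] * 8
    state[0b000] = complex(alpha)
    state[0b111] = complex(beta)
    return state

def apply_bit_flip(state: list[complex], qubit: int) -> list[complex]:
    """Pauli-X error on one qubit: swaps amplitudes of basis states differing in that bit."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]

def syndrome(state: list[complex]) -> tuple[int, int]:
    """Parities (q0 xor q1, q1 xor q2). After at most one bit flip, every basis state with
    nonzero amplitude has the same parities, so they reveal nothing about alpha or beta."""
    for i, amp in enumerate(state):
        if abs(amp) > 1e-12:
            return bit(i, 0) ^ bit(i, 1), bit(i, 1) ^ bit(i, 2)
    raise ValueError("zero state")

# Which qubit to flip back for each syndrome; (0, 0) means no error detected.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state: list[complex]) -> list[complex]:
    """Undo a single bit flip identified by the syndrome."""
    target = CORRECTION[syndrome(state)]
    return state if target is None else apply_bit_flip(state, target)

if __name__ == "__main__":
    logical = encode(0.6, 0.8)
    for q in range(3):
        damaged = apply_bit_flip(logical, q)
        print(q, syndrome(damaged), correct(damaged) == logical)  # True for each single flip
```

The correction never learns α or β; it uses only the parity pattern, which is why the encoded superposition survives intact.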

Shor’s “quantum error correction” methods boosted confidence that quantum computing technology would in fact someday be possible — confidence that was warranted in light of the progress in the two decades since then. Much more recently, the math describing quantum error correction has turned up in a completely unexpected context — efforts to understand the nature of spacetime by uniting gravity with quantum mechanics. Caltech physicist John Preskill and his collaborators have recently emphasized “a remarkable convergence of quantum information science and quantum gravity”; they suggest that the same quantum error correction codes that describe the entanglement of qubits to hold a quantum computation together also describe the way that quantum connections generate the geometry of spacetime itself.

So it seems likely that the meaning and power of quantum information, represented by the qubit, have yet to be fully understood and realized. It may be that Schumacher’s qubit is not merely a new way of looking at the relation of quantum mechanics to information, but also the key to a fundamentally new way of conceiving the foundations of reality and existence, much as Wheeler imagined when he coined It from Bit.